How to run ChatGPT Privately on your Laptop

Learn How to run ChatGPT Privately on your Laptop in less than 5 minutes! You don't even need to be connected to internet. (after you downloaded everything)

One of the main reasons to run a Large Language Model (LLM) on your laptop or your own server is for Privacy. Many enterprises especially in highly regulated industries like Insurance and Finance can't just use ChatGPT or Github Copilot. The prompt might not be a big issue, but when you want to send the context with real data, you can't just send trade secrets (could be software code) or PHI to an external company. Surely it can be done, but the regulations are not fully caught up.

What is the Solution?

Run them privately, therefore don't even transfer anything over the internet. You don't even need to be connected to internet.

All the actual models that are used in Google Bard, Google Gemini, OpenAI and AzureAI and all that AWS offers are private; you can't just download and enhance them. Facebook has released a Model that can be downloaded and used, however the licensing doesn't allow modification.

A French company called Mistral, out of goodness of their heart have actually released a completely open source model called: Mixtral-8x7B under Apache 2 license. Yaaaay!!

This means download the model and train it aka modify the model to be good at somethings that is more relevant to your job, Or you might want to open it to be more biased. Maybe you want a more Religious Model or a Racist Model, Something that is better in coding, or ...

The other benefit to downloading the model is actually you do not need to be connected to Internet. The AI works completely offline after you download it.

The model outperforms ChatGPT 3.5 and LLaMA 13B:

We introduce Mistral 7B v0.1, a 7-billion-parameter language model engineered for superior performance and efficiency. Mistral 7B outperforms Llama 2 13B across all evaluated benchmarks, and Llama 1 34B in reasoning, mathematics, and code generation. Our model leverages grouped-query attention (GQA) for faster inference, coupled with sliding window attention (SWA) to effectively handle sequences of arbitrary length with a reduced inference cost. We also provide a model fine-tuned to follow instructions, Mistral 7B -- Instruct, that surpasses the Llama 2 13B -- Chat model both on human and automated benchmarks. Our models are released under the Apache 2.0 license.

Here is the link to the Paper: https://arxiv.org/abs/2310.06825

How to run it locally?

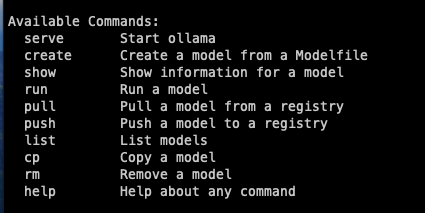

The easiest and fastest that you can be up and running in 5 minutes, is by using Ollama.

you can download it from here: https://github.com/jmorganca/ollama

If you are on Windows, you can run it under WSL (Windows Subsystem for Linux)

Follow the instructions here to get Ubuntu running on your PC ;)

https://learn.microsoft.com/en-us/windows/wsl/

if you are unsure which one, my recommendation is to go with WSL 2. that is an actual Linux kernel running on you PC, therefore you can install any other Linux application on your PC too.

once it is installed, open a command prompt and you can interact with Ollama:

you can just call run mistral and that's it:

ollama run mistral

Now you can simply type and it will answer the same way that you use Bard or ChatGPT.

If running multimodal models, it can, for example, analyze pictures too (from the website):

>>> What's in this image? /Users/jmorgan/Desktop/smile.png

The image features a yellow smiley face, which is likely the central focus of the picture.Ollama also provides a REST API, which is fantastic!

curl http://localhost:11434/api/chat -d '{

"model": "mistral",

"messages": [

{ "role": "user", "content": "why is the sky blue?" }

]

}'

Endless Possibilities

Since you don't even need internet connection, this can be embedded in all kinds of devices. Most of these Models are general purpose, meaning you can ask questions about what is the best feed for chickens and the next question could be why this line in Python is throwing an error.

For example, you can run it on a RaspberryPi or any other embedded device that has at least 8G Ram, then have a camera taking pictures every minute from a street. Then you can ask, have you seen any Dogs on the street in the last hour?

Resources

lots of good info and tips on Ollama's github page:

https://github.com/jmorganca/ollama

You should also know about Hugging Face.

It is the AI community, the start of the rabbit hole. It has all kinds of models, and data sets, you can also rent GPUs basically to train your models.

https://huggingface.co

WSL: https://learn.microsoft.com/en-us/windows/wsl/

That's a wrap. Thank you for your time